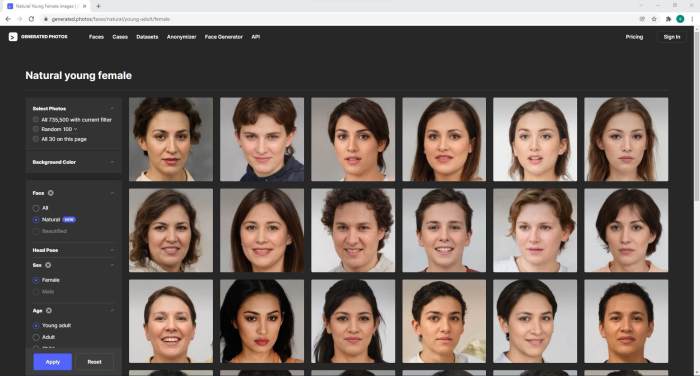

A website that uses AI to continuously generate fake faces sounds like something out of a sci-fi movie. But this isn’t fiction; it’s a rapidly evolving reality with serious ethical, legal, and societal implications. The site looks innocuous, yet it raises crucial questions about the future of online identity, the erosion of trust, and the potential for widespread misuse. From deepfakes to identity theft, the possibilities are both fascinating and deeply unsettling.

The technology behind these AI-generated faces is surprisingly sophisticated, employing complex algorithms and massive datasets to create incredibly realistic images. This article delves into the technical aspects of this technology, exploring the methods used, the challenges in detection, and the potential for misuse. We’ll also examine the legal and regulatory frameworks currently in place (or lacking) to address this burgeoning issue and discuss the urgent need for proactive measures to mitigate the risks.

Technical Aspects of AI Fake Face Generation

Generating realistic fake faces using AI is no longer science fiction; it’s a rapidly evolving field with significant implications across various sectors. The technology behind these eerily lifelike creations is complex, blending advanced algorithms and massive datasets to produce convincing results. This section delves into the technical intricacies of this fascinating process.

At its core, AI-powered fake face generation relies on deep learning, specifically Generative Adversarial Networks (GANs) and autoencoders. GANs, in particular, have revolutionized the field. They consist of two neural networks locked in a constant game of cat and mouse: a generator that creates fake faces and a discriminator that tries to distinguish real faces from fakes. This adversarial training process pushes both networks to improve, resulting in increasingly realistic outputs from the generator.
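The cat-and-mouse game can be written as a single objective. The block below gives the standard minimax formulation from the original GAN literature; the symbols are not from this article and are introduced only for illustration: $G$ is the generator, $D$ the discriminator, $x$ a real face drawn from the data distribution $p_{\text{data}}$, and $z$ a noise vector drawn from a prior $p_z$. Production models typically train with variants of this loss, so treat it as a reference form rather than the exact loss any particular website uses.

```latex
\min_{G} \max_{D} \; V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_z}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

The discriminator raises $V$ by scoring real faces near 1 and generated faces near 0; the generator lowers $V$ by producing images that the discriminator scores near 1.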

Generative Adversarial Networks (GANs) and Autoencoders

GANs are the workhorses of AI fake face generation. The generator network starts with random noise and transforms it into an image. The discriminator, trained on a vast dataset of real faces, evaluates the generated image and provides feedback to the generator. This feedback loop continues iteratively, refining the generator’s ability to create convincingly realistic faces. Autoencoders, on the other hand, learn compressed representations of faces and can be used to generate variations or modify existing images. They work by compressing the input image into a lower-dimensional representation (latent space) and then reconstructing it. By manipulating the latent space, we can generate new faces with subtle variations.
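Manipulating the latent space can be as simple as blending two latent vectors. The following is a minimal sketch in plain Python, assuming latent codes are lists of floats; the 4-dimensional vectors `z_face_a` and `z_face_b` are made-up values, and the decoder that would turn each vector into an image is not shown.

```python
from typing import List


def lerp(a: List[float], b: List[float], t: float) -> List[float]:
    """Linearly interpolate between two latent vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("latent vectors must have the same length")
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def interpolation_path(a: List[float], b: List[float], steps: int) -> List[List[float]]:
    """Return `steps` evenly spaced latent vectors from a to b, inclusive."""
    if steps < 2:
        raise ValueError("need at least two steps")
    return [lerp(a, b, i / (steps - 1)) for i in range(steps)]


# Hypothetical latent codes for two faces (illustrative values only).
z_face_a = [0.0, 1.0, -2.0, 0.5]
z_face_b = [1.0, 1.0, 2.0, -0.5]

path = interpolation_path(z_face_a, z_face_b, steps=5)
for z in path:
    # In a real system, each z would be passed to a trained decoder
    # (or generator) to render one frame of the morph between faces.
    print(z)
```

Decoding each vector along the path would yield a gradual morph from one face to the other, which is the "subtle variations" idea in its simplest form.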

Datasets and Training Methods

The quality of AI-generated faces depends heavily on the quality and quantity of the training data. Large datasets of real human faces are crucial, ranging from tens of thousands of curated high-resolution photos to millions of images. These datasets are often sourced from publicly available image repositories, which raises issues of bias and privacy that demand careful attention, since the people pictured may not have consented to this use. The training process involves feeding these images to the GAN or autoencoder, allowing the network to learn the underlying patterns and characteristics of human faces. Techniques like data augmentation (applying transformations like rotations and flips to existing images) are used to increase the size and diversity of the training data, leading to more robust and generalizable models.
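The augmentation step can be sketched without any imaging library. This example assumes a grayscale image stored as a list of pixel rows; the function names are illustrative, not from any specific toolkit. A 90-degree rotation is used only because it needs no interpolation; face pipelines more commonly rely on horizontal flips and small-angle rotations.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of pixel values


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in img]


def rotate_90_clockwise(img: Image) -> Image:
    """Rotate the image a quarter turn clockwise."""
    # Reverse the row order, then transpose rows into columns.
    return [list(col) for col in zip(*img[::-1])]


def augment(img: Image) -> List[Image]:
    """Return the original image plus two transformed copies."""
    return [img, flip_horizontal(img), rotate_90_clockwise(img)]


tiny = [[1, 2],
        [3, 4]]
for variant in augment(tiny):
    print(variant)
```

Each source image yields three training samples here; real pipelines usually apply such transformations randomly at load time instead of storing the copies.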

Step-by-Step Generation of a Fake Face using StyleGAN2

Let’s illustrate the process using StyleGAN2, a popular GAN architecture known for generating high-quality fake faces. During training, the generator and discriminator are optimized together: generated images are fed to the discriminator, which assesses their realism, and its feedback is used to adjust the weights of the generator network. This iterative process continues until a satisfactory level of realism is achieved. Once training is finished, the discriminator is no longer needed, and producing a face takes a single pass through the generator. First, a random noise vector is sampled; it acts as the seed for the face. A mapping network then transforms this vector into an intermediate style code. Finally, the synthesis network, a stack of convolutional layers, builds the image at progressively higher resolutions, with the style code steering each layer, from coarse features such as pose and face shape to fine details like hair, eyes, and skin texture. The final output is a high-resolution image of a fake face that looks incredibly lifelike.
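The first step, sampling the noise that seeds a face, can be sketched in plain Python. This is a sketch under stated assumptions rather than StyleGAN2's actual code: the 512-dimensional latent size matches the published StyleGAN2 configurations, the function names are hypothetical, and the normalization step mirrors what the reference implementation is understood to do before its mapping network. No trained network is included.

```python
import math
import random
from typing import List

LATENT_DIM = 512  # assumed latent size, as in published StyleGAN2 configs


def sample_latent(seed: int, dim: int = LATENT_DIM) -> List[float]:
    """Draw a latent vector from a standard normal distribution."""
    rng = random.Random(seed)  # a fixed seed makes the face reproducible
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def normalize_latent(z: List[float], eps: float = 1e-8) -> List[float]:
    """Rescale z so that its mean squared value is approximately 1."""
    mean_sq = sum(v * v for v in z) / len(z)
    scale = 1.0 / math.sqrt(mean_sq + eps)
    return [v * scale for v in z]


z = normalize_latent(sample_latent(seed=42))
print(len(z))  # number of latent dimensions
# A trained generator would now map z to an image; a different seed
# gives a different vector and therefore a different face.
```

Because the generator is deterministic once trained, a site that serves a new face on every page load only needs to draw a fresh seed per request.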

Comparison of AI Models for Fake Face Generation

Several AI models excel at generating fake faces, each with its strengths and weaknesses. The quality and realism vary depending on the architecture, training data, and training methods employed.

- StyleGAN2: Known for producing high-resolution, incredibly realistic images with fine details. However, it can be computationally expensive to train.

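- StyleGAN3: A redesign of StyleGAN2 that targets aliasing in the generator. It addresses artifacts found in earlier versions, notably fine details that appear stuck to fixed pixel positions when the face moves, which makes it better suited to video and animation. Still-image quality is broadly comparable to StyleGAN2, and training is generally no cheaper.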

- ProGAN: An earlier model that trains by progressively growing the image resolution, paving the way for more advanced GAN architectures. While capable of generating decent results, it’s less sophisticated than newer models.

The ongoing development in this field promises even more realistic and sophisticated fake face generation in the future, highlighting the importance of ethical considerations and responsible use of this technology.

Legal and Regulatory Frameworks

The rise of AI-generated fake faces presents a fascinating legal grey area. While the technology offers exciting possibilities in fields like entertainment and advertising, its potential for misuse – from identity theft to deepfakes used for political manipulation – demands serious consideration of existing laws and the need for new ones. The current legal landscape is a patchwork, varying significantly across jurisdictions, and struggles to keep pace with the rapid advancements in AI.

The creation and distribution of AI-generated fake faces currently fall under existing laws relating to defamation, privacy violations, and intellectual property rights. However, these laws were not designed with AI-generated content in mind, leading to significant gaps and ambiguities. For instance, determining liability when a fake face is used to impersonate someone becomes complex. Is the creator of the AI, the user who deployed it, or the platform hosting the image responsible? These are just some of the questions demanding clear answers.

Current Legal Landscape

Existing laws, such as those protecting against defamation and invasion of privacy, offer some level of protection, but their applicability to AI-generated content is often unclear. For example, defamation claims in some jurisdictions require proof of fault, such as malice or intent to harm. However, proving intent when an AI generates a potentially defamatory image autonomously presents a significant challenge. Similarly, privacy laws might offer recourse if a fake face is used to create a non-consensual intimate image, but navigating the legal process can be lengthy and complex. The lack of clear guidelines often leaves victims with limited options for redress.

The Need for New Laws and Regulations

The rapid evolution of AI-generated imagery necessitates a proactive approach to legal frameworks. Existing laws are often inadequate to address the unique challenges posed by this technology, leading to a significant risk of harm. New legislation should specifically address the creation, distribution, and use of AI-generated fake faces, establishing clear guidelines on liability, consent, and acceptable use. A key consideration is the balance between fostering innovation and preventing malicious use. The focus should be on establishing clear rules and penalties while avoiding overly restrictive regulations that stifle technological advancement.

International Legal Frameworks: A Comparison

Different countries have varying approaches to regulating AI-generated content. The European Union, for instance, is at the forefront of developing comprehensive AI regulations, with a focus on transparency and accountability. Its General Data Protection Regulation (GDPR) already provides some level of protection against the misuse of personal data, including images of identifiable people. However, specific regulations tailored to AI-generated content are still emerging. In contrast, some countries have less stringent regulations, potentially leading to a global disparity in the legal protection afforded to individuals whose images are misused. This lack of harmonization poses challenges in cross-border disputes.

Proposed Legal Framework: Penalties for Misuse

A proposed legal framework should include a tiered system of penalties, ranging from fines to imprisonment, depending on the severity and intent of the misuse. For instance, the non-consensual creation and distribution of AI-generated fake faces for malicious purposes, such as blackmail or fraud, should be met with significant penalties. Similarly, the use of AI-generated fake faces to spread disinformation or influence elections should carry substantial legal consequences. The framework should also address the responsibility of AI developers, platform providers, and users, ensuring clarity on liability and accountability. A clear reporting mechanism, coupled with swift investigative procedures, is crucial for effective enforcement. The penalties should be designed to deter malicious use while promoting responsible innovation.

The proliferation of websites capable of generating realistic fake faces using AI presents a significant challenge to our digital world. While the technology offers potential benefits in areas like entertainment and research, its capacity for malicious use demands immediate attention. From stricter regulations and improved detection methods to increased public awareness, a multi-pronged approach is crucial to navigate this complex landscape. The future of online trust depends on it. The question isn’t just *if* this technology will be misused, but *how* effectively we can prevent it.

Informatif Berita Informatif Terbaru
